A technological revolution, or evolution?

October 2023

Introduction

ChatGPT burst onto the scene in November 2022, and we have since seen the release of GPT-4, as well as a number of other state-of-the-art models. Generative AI describes the use of deep-learning models to create new content, including text, images, code, audio, simulations and videos, and progress in the field is rapid. By training on vast quantities of content and learning patterns with architectures that have billions of parameters, the latest models are becoming increasingly capable of producing realistic outputs.

In our latest paper, we review Generative AI’s capabilities in depth, assess its potential impact on the global economy, including on asset management, and consider what the next frontier might look like. We provide a high-level summary of some of our findings below, but readers are encouraged to peruse the full academic paper, which can be downloaded here.

Culmination of innovation

Despite appearances, ChatGPT (where GPT stands for “Generative Pre-trained Transformer”) did not emerge overnight. Indeed, it is the culmination of three key innovations: the Transformer model, large-scale generative pre-training, and reinforcement learning from human feedback (RLHF).

Specifically, the Transformer model introduced a powerful and efficient way to model language, utilising the concept of “attention” that allowed different words in a sequence to relate to each other – capturing the long-term dependencies necessary for language understanding. Generative pre-training involves training these Transformer models by getting them to predict the next word given the past history of words, using huge quantities of text (billions to trillions of words from across the Internet, books, etc.). With sufficiently large models and training data, this task gives models strong natural language capabilities, including the ability to generate convincing text. With RLHF, these large language models are further trained (“fine-tuned”) based on human preferences to follow instructions and provide helpful and safe responses.
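
To make “attention” concrete, here is a minimal sketch of scaled dot-product attention, the form introduced with the Transformer, for a single query in plain Python. It is an illustrative toy under simplifying assumptions: the query, key and value vectors are made-up numbers rather than learned projections of real word embeddings, and production models operate on batched matrices with many attention heads.

```python
import math
from typing import List

Vector = List[float]

def softmax(scores: Vector) -> Vector:
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for one query vector.

    Each key is scored against the query, scores are scaled by 1/sqrt(d)
    and normalised with softmax, and the output is the weighted average
    of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]

# Toy sequence of three "words", each with a 2-dimensional key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]  # aligned with the first and third keys
print(attention(query, keys, values))
```

Because the query is most similar to the first and third keys, their values receive the largest weights, so the output is pulled towards them. This weighting of every position against every other is what lets the model relate words that are far apart in a sequence.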

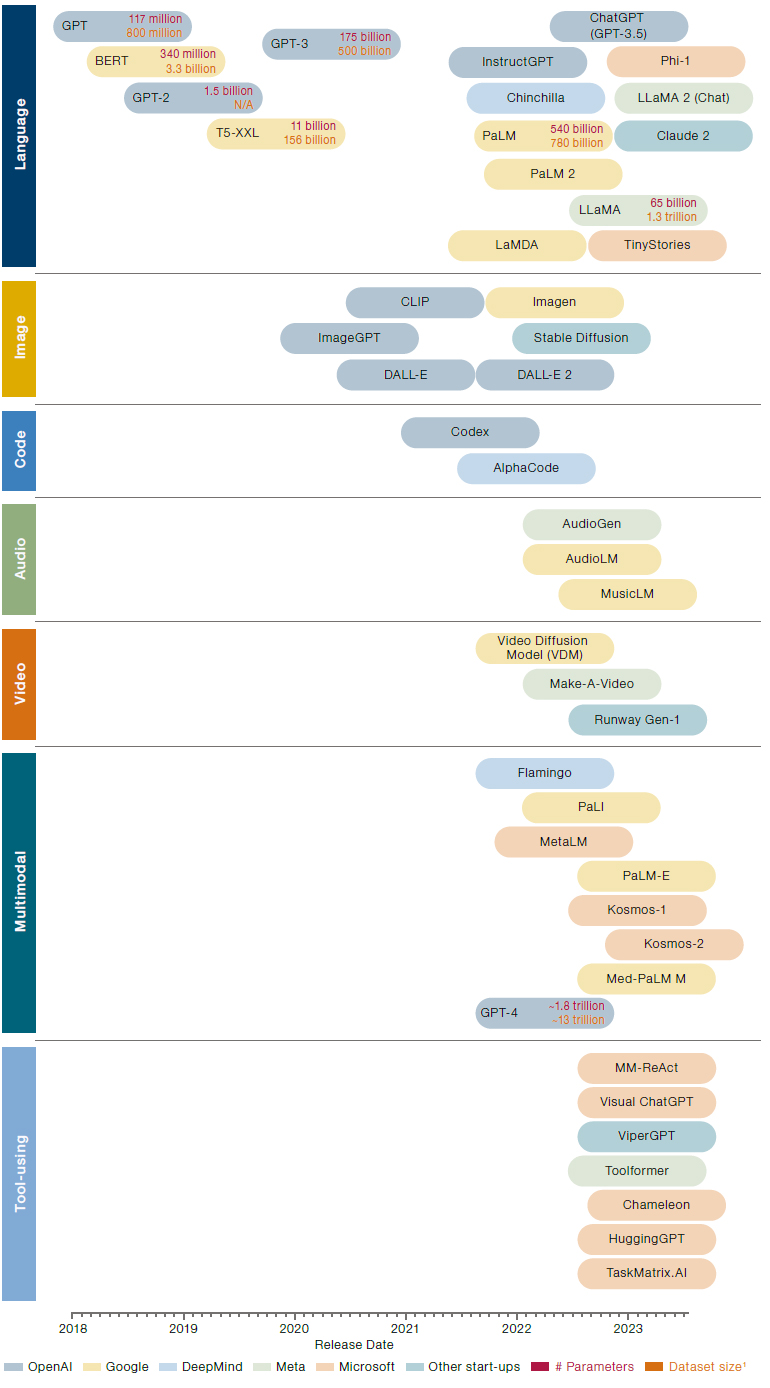

As an aside, there are many other large language models (LLMs) besides ChatGPT, which differ in model size, training data and fine-tuning steps. Some of the latest models include Chinchilla from Google’s DeepMind, PaLM 2 from Google, LLaMA 2 from Meta, and Claude 2 from Anthropic.

Two important technologies, diffusion models and the CLIP (Contrastive Language-Image Pre-training) model, have driven the development of text-to-image models such as DALL-E 2 and Stable Diffusion. Diffusion models learn to produce realistic images by reversing a gradual noising process: during training, noise is progressively added to images and the model learns to predict the noise that was added, so it can then run the process backwards, starting from pure noise and gradually backing out an image. CLIP, in turn, gave image models a way to understand language. Combining the two, the language-image concepts learnt by CLIP can be used to “interpret” a natural language prompt, which then conditions a diffusion model to produce a matching image.
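
The noising-and-reversal idea can be sketched with the closed-form forward step of denoising diffusion probabilistic models (DDPM), one common formulation; we assume it here for illustration and do not claim it is the exact variant used by DALL-E 2 or Stable Diffusion. The linear noise schedule, the 4-"pixel" image and the oracle that returns the true noise (standing in for a trained network) are all illustrative assumptions.

```python
import math
import random
from typing import List

def alpha_bar_schedule(num_steps: int, beta_start: float = 1e-4,
                       beta_end: float = 0.02) -> List[float]:
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0: List[float], alpha_bar: float, noise: List[float]) -> List[float]:
    """Forward process: jump from a clean image x0 straight to noise level t."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * e for x, e in zip(x0, noise)]

def estimate_x0(xt: List[float], alpha_bar: float,
                predicted_noise: List[float]) -> List[float]:
    """Invert the forward step using a prediction of the added noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * e) / a for x, e in zip(xt, predicted_noise)]

rng = random.Random(0)
image = [0.2, -0.5, 0.9, 0.0]  # hypothetical toy image
alpha_bars = alpha_bar_schedule(1000)
t = 500
noise = [rng.gauss(0.0, 1.0) for _ in image]
noisy = add_noise(image, alpha_bars[t], noise)

# A trained network would predict `noise` from (noisy, t); the true noise is
# used here as a stand-in oracle, so the reconstruction is exact up to rounding.
recovered = estimate_x0(noisy, alpha_bars[t], noise)
print(recovered)
```

In practice the network's noise prediction is imperfect, so sampling removes noise a little at a time over many steps rather than in one jump, and a text-conditioned model such as one guided by CLIP embeddings receives the prompt as an extra input to that prediction.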

Figure 1. Innovation timeline: How did we get to where we are today?

Source: Man AHL. Includes Generative AI models covered in this paper.

Breadth of capabilities

The capabilities of Generative AI models extend beyond text and images: they can also produce code, audio, music and video. Indeed, the most powerful models today are “multimodal”, meaning that they can handle multiple modalities, such as text and images, as inputs and/or outputs. These include PaLM-E (Google) and Kosmos-1 (Microsoft), which can take both text and image data to perform tasks such as visual question answering and image recognition; PaLM-E can also receive sensor information as input to perform basic robotics tasks. The more advanced Kosmos-2 model adds visual grounding capabilities: it can link text descriptions to the image regions they refer to and draw bounding boxes around them.

An example of multimodality being useful in real-life applications is the Med-PaLM M model, which can take a range of biomedical data modalities and perform medical question answering, classification and report generation at a quality matching or even exceeding that of specialist state-of-the-art models. GPT-4 also falls into this category and is one of the most capable models currently available.

Yet, Generative AI models themselves are only one part of the story. An increasingly powerful area of development is giving these models the ability to use tools. This kind of approach can already be seen in the plug-ins available in ChatGPT with the GPT-4 model, such as the Wolfram Alpha plug-in that allows ChatGPT to reliably perform complex mathematical calculations that it otherwise would not be able to do. Models like MM-ReAct and Visual ChatGPT take this further, giving out-of-the-box ChatGPT access to specialist visual models as “tools” for it to use (e.g., an image captioning expert, celebrity recognition expert, etc.).

An ecosystem of tool-using models: The next frontier?

So where from here? The next frontier could be an ecosystem where a powerful multimodal model with general tool-using capabilities can connect with an API platform of tools for it to use when performing tasks. This vision is outlined in the TaskMatrix.AI position paper, written by Microsoft researchers, which highlights a “clear and pressing need” to connect the general high-level planning abilities of multimodal models with specialised domain-specific models and systems. Evidence for the potential of such a future already exists: Chameleon and HuggingGPT are both frameworks that give a multimodal model such as GPT-4 access to a large and diverse inventory of tools (including vision models, web search, Python functions and models on Hugging Face, among others).
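
The dispatch side of such an ecosystem can be sketched as a registry that maps tool names to functions, plus a controller that executes a structured tool call emitted by the planning model. This is a minimal sketch under stated assumptions: the model itself is not shown (a hard-coded JSON string stands in for its output), and the tool names `power` and `word_count`, the JSON shape and the `describe_tools` helper are hypothetical, not the actual interface of ChatGPT plug-ins, TaskMatrix.AI, Chameleon or HuggingGPT.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: name -> {"description": ..., "fn": ...}.
TOOLS: Dict[str, Dict[str, Any]] = {}

def register(name: str, description: str) -> Callable:
    """Decorator that adds a function to the tool registry."""
    def decorator(fn: Callable) -> Callable:
        TOOLS[name] = {"description": description, "fn": fn}
        return fn
    return decorator

@register("power", "Exact integer exponentiation: base ** exponent.")
def power(base: int, exponent: int) -> int:
    return base ** exponent

@register("word_count", "Count whitespace-separated words in a text.")
def word_count(text: str) -> int:
    return len(text.split())

def describe_tools() -> str:
    """The tool menu a planner model might be shown in its prompt."""
    return "\n".join(f"{name}: {meta['description']}" for name, meta in TOOLS.items())

def dispatch(tool_call_json: str) -> Any:
    """Run a tool call of the assumed form {"tool": ..., "args": {...}}."""
    call = json.loads(tool_call_json)
    name = call["tool"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name]["fn"](**call["args"])

# A real planner would read describe_tools() and emit this string itself.
model_output = '{"tool": "power", "args": {"base": 2, "exponent": 64}}'
print(describe_tools())
print(dispatch(model_output))
```

The design keeps the general model responsible only for choosing a tool and its arguments, while the exact work is delegated to specialised, deterministic code; adding a capability means registering one more function rather than retraining the model.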

Potential widespread effects on the economy

Initial studies quantifying the impact of these developments on jobs have shown that the potential effects on productivity are widespread, with highly skilled, highly educated, higher-earning white-collar occupations more likely to be exposed to Generative AI. In law, GPT-4 has been shown to pass the Uniform Bar Examination, and academic papers and commercial solutions alike have demonstrated its abilities in a range of legal tasks. There is also early evidence of GPT-4 exhibiting capabilities in accounting, while anecdotal evidence suggests that LLMs can aid academic research, in particular as a potential tool for generating ideas.

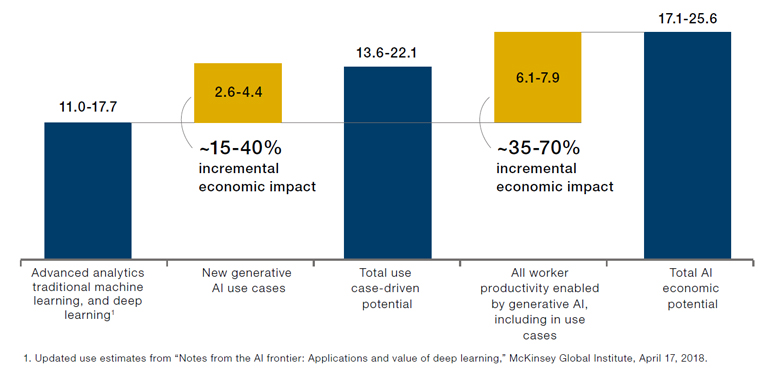

A McKinsey report has suggested that Generative AI could deliver $2.6 trillion to $4.4 trillion in economic benefits annually, equivalent to roughly 2.6% to 4.4% of global GDP in 2022 [2]. The business functions that account for the bulk of this value are customer operations, marketing and sales, software engineering, and R&D. Improvements in these functions could have significant impacts across all industry sectors, with banking, high tech and life sciences among the industries most affected.
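
As a quick sanity check on the conversion, dividing the dollar range by the percentage range recovers the global GDP figure the percentages assume. The only inputs are the figures quoted above; the result, about $100 trillion, is the 2022 world GDP level these percentages imply.

```python
# Annual value range from the McKinsey estimate quoted above, in US$ trillion.
value_low, value_high = 2.6, 4.4
# The same range expressed as a share of 2022 global GDP.
share_low, share_high = 0.026, 0.044

# Dividing value by share recovers the global GDP figure the percentages imply.
implied_gdp_low = value_low / share_low
implied_gdp_high = value_high / share_high
print(f"Implied 2022 global GDP: about ${implied_gdp_low:.0f} trillion")
```

Both ends of the range imply the same denominator, which is why the dollar and percentage figures share the same digits.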

Figure 2. McKinsey’s estimate of the potential impact of AI on the global economy, $ trillion

Source: McKinsey.

Generative AI in asset management

Many of the wider impacts of Generative AI also apply to asset management. These include assisting with software development and coding (such as improving data infrastructure and helping portfolio managers, even those with little coding experience, build quantitative models and analytics), as well as enabling more tailored and interactive sales and marketing campaigns. The abilities of LLMs in the legal domain are also relevant here, as they can help expedite the creation and review of legal contracts, and their capacity to summarise text can be applied to internal databases to answer questions (such as those in due diligence questionnaires and RFPs).

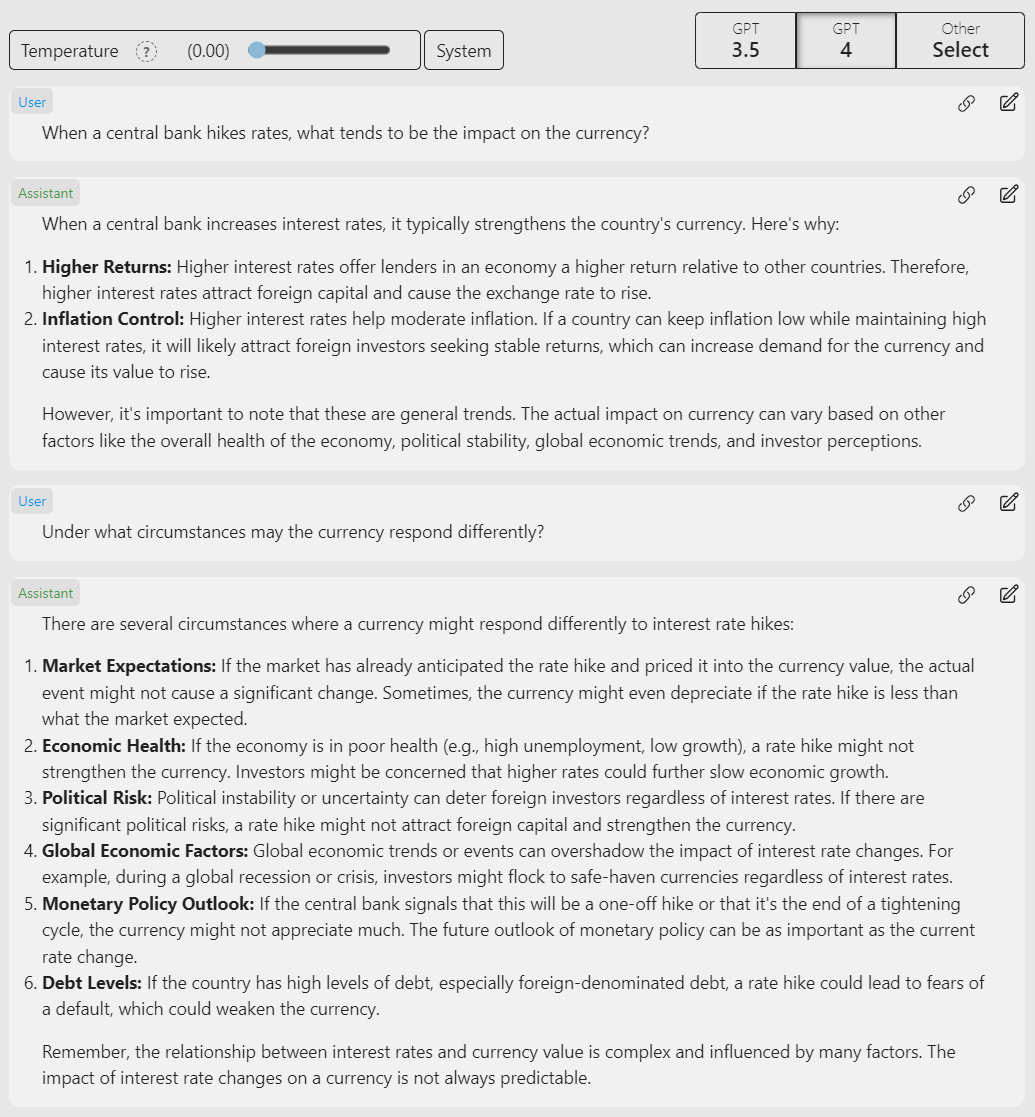

Homing in on the potential applications of Generative AI for portfolio managers, early evidence suggests that LLMs can outperform more traditional BERT- and dictionary-based methods at sentiment analysis, the process of analysing financial text (such as earnings call transcripts) to determine whether its tone is positive, negative or neutral. Further, a recent study has shown that ChatGPT can effectively summarise the contents of corporate disclosures, and can even produce targeted summaries specific to a particular topic. Another use case for LLMs in asset management is identifying links between conceptual themes and company descriptions to create thematic baskets of stocks.
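
For context on the traditional baseline, a dictionary-based scorer counts positive and negative words and maps the net score to a label. This is a minimal sketch: the tiny word lists are hypothetical stand-ins (practitioners typically use curated finance lexicons such as Loughran-McDonald), and the scoring rule and neutral threshold are illustrative assumptions.

```python
import re
from typing import Set

# Hypothetical mini-lexicons; real applications use curated finance dictionaries.
POSITIVE: Set[str] = {"growth", "strong", "improved", "record", "exceeded", "beat"}
NEGATIVE: Set[str] = {"decline", "weak", "loss", "impairment", "missed", "headwinds"}

def dictionary_sentiment(text: str, threshold: float = 0.0) -> str:
    """Label text positive/negative/neutral from net lexicon hits.

    The score is (positive hits - negative hits) / total hits, so it lies
    in [-1, 1]; text containing no lexicon words is treated as neutral.
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    pos = sum(tok in POSITIVE for tok in tokens)
    neg = sum(tok in NEGATIVE for tok in tokens)
    if pos + neg == 0:
        return "neutral"
    score = (pos - neg) / (pos + neg)
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"

print(dictionary_sentiment("Revenue growth was strong and we beat guidance."))
print(dictionary_sentiment("We missed targets amid weak demand and an impairment."))
print(dictionary_sentiment("Margins were not strong this quarter."))
```

The last sentence is labelled positive because the scorer ignores the negation “not”; blind spots of this kind are one reason more context-aware models can be expected to do better.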

Outside of LLMs, GANs (Generative Adversarial Networks) are another kind of Generative AI model, one that can create synthetic financial time-series data. Studies have shown that GANs can produce price data exhibiting stylised facts that mirror empirical data (such as fat-tailed distributions and volatility clustering), with state-of-the-art applications including the estimation of tail risk by generating realistic synthetic tail scenarios. GANs have also been used in portfolio construction, strategy hyperparameter tuning and the creation of synthetic order book data.
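
Two of the stylised facts mentioned above can be checked on any return series, real or GAN-generated: excess kurtosis (positive for fat-tailed data, zero for a normal distribution) and the autocorrelation of absolute returns (positive under volatility clustering). In this minimal sketch a GARCH(1,1) simulator stands in for a trained generator purely so the example is self-contained; it is not a GAN, and its parameters are arbitrary assumptions.

```python
import random
from typing import Dict, List

def excess_kurtosis(x: List[float]) -> float:
    """Sample excess kurtosis: about 0 for normal data, positive for fat tails."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m4 / m2 ** 2 - 3.0

def autocorr(x: List[float], lag: int = 1) -> float:
    """Sample autocorrelation of x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    cov = sum((x[t] - mean) * (x[t - lag] - mean) for t in range(lag, n))
    return cov / var

def simulate_garch(n: int, omega: float = 1e-6, alpha: float = 0.15,
                   beta: float = 0.8, seed: int = 0) -> List[float]:
    """GARCH(1,1) returns: a stand-in 'generator' with volatility clustering."""
    rng = random.Random(seed)
    var = omega / (1.0 - alpha - beta)  # start at the unconditional variance
    returns = []
    for _ in range(n):
        r = rng.gauss(0.0, 1.0) * var ** 0.5
        returns.append(r)
        var = omega + alpha * r * r + beta * var  # big moves raise next variance
    return returns

def stylised_facts(returns: List[float]) -> Dict[str, float]:
    abs_returns = [abs(r) for r in returns]
    return {
        "excess_kurtosis": excess_kurtosis(returns),
        "abs_return_autocorr_lag1": autocorr(abs_returns, 1),
    }

n = 20_000
rng = random.Random(1)
gaussian = [rng.gauss(0.0, 0.01) for _ in range(n)]
print("Gaussian:", stylised_facts(gaussian))
print("GARCH:   ", stylised_facts(simulate_garch(n)))
```

One would expect both statistics to be clearly positive for the GARCH series and close to zero for the Gaussian one; a synthetic series from a GAN would be judged by the same yardsticks against real market data.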

Other applications in asset management include the use of LLMs in generating economic hypotheses to build and evaluate systematic macro trading signals, potentially using ChatGPT as a financial adviser, and using Generative AI models in supervised contexts.

Figure 3. Example output from GPT-4 when asked an economic question

Source: ChatGPT.

Not without its dangers

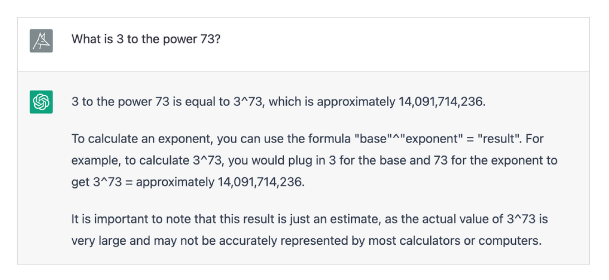

Clearly, the impact of Generative AI is potentially both broad and transformative across asset management and a range of other industries. However, an analysis of Generative AI would not be complete without reference to its dangers, among which hallucinations (when LLMs generate incorrect, made-up content that can sound plausible and convincing) are particularly important.

Figure 4. ChatGPT hallucinating a mathematical answer (correct answer is 6.7585×10^34)

Source: S. Wolfram, “Wolfram|Alpha as the Way to Bring Computational Knowledge Superpowers to ChatGPT,” 9 January 2023. [Online]. Available: writings.stephenwolfram.com/2023/01/wolframalpha-as-the-way-to-bring-computational-knowledge-superpowers-to-chatgpt/.
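
Exact arithmetic of this kind is trivial for a deterministic tool, which is the motivation for plug-ins such as Wolfram Alpha. The caption does not state the prompt; we assume it is the “3 to the power 73” question from the Wolfram post cited above, since 3^73 ≈ 6.7585×10^34 matches the stated answer. Python integers have arbitrary precision, so the result below is exact.

```python
# Assumption: the Figure 4 prompt asks for 3 to the power 73.
exact = 3 ** 73  # arbitrary-precision integer, so no rounding occurs
digits = str(exact)
print(exact)
print(f"{digits[0]}.{digits[1:5]}e{len(digits) - 1}")  # prints 6.7585e34
```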

Generative AI models also tend to amplify the biases and stereotypes prevalent in their training data and can perpetuate harmful or toxic content that may be present. There are also issues of data privacy and copyright infringement, as Generative AI models can unintentionally reveal private, sensitive or copyrighted information. These powerful models can also be used for malicious purposes, most notably making disinformation campaigns highly effective and cheap to run, as well as facilitating fraud and scams.

A plethora of other ethical and social issues must also be considered, including the environmental cost of running highly computationally intensive model training and inference, various geopolitical issues, unpredictable emergent behaviours of more powerful models, and the proliferation of synthetic data on the Internet that can be a concern for training future models.

A future world of widespread Generative AI adoption requires cooperation between model developers, model users, regulators, and policymakers alike, to foster an environment where the powers of Generative AI are safely and responsibly utilised for a more productive society.

Conclusion

Development in the space of Generative AI is rapid, and the future of the technology is highly unpredictable. The Transformer model was only introduced in 2017, and even the GPT-2 model released in 2019 was described by its OpenAI researchers as “far from useable” in terms of practical applications. Since ChatGPT emerged onto the scene in November 2022, GPT-4 has been released, and a number of the state-of-the-art models mentioned above have only arrived this year. The rate of progress is significant, not just in the scale of models and training data, but also in the development of multiple modalities and the use of tools. However, the future is highly uncertain: what kind of new abilities will models develop? What will be the key limiting factors to even better performance? What will be the most important applications in asset management? Only time will tell.

This has been a whistle-stop tour of some of the key findings from our original paper on Generative AI. The paper was prepared for Man Group’s Academic Advisory Board, where leading financial academics and representatives from across the firm’s investment engines meet to discuss a given topic.

The full paper, which includes a list of all academic papers referenced, can be downloaded for free from SSRN here.

1. Approximate training corpus size in number of reported words/tokens/images.

2. data.worldbank.org/indicator/NY.GDP.MKTP.CD
